Provisional list of accepted papers and posters

The list of invited talks is here.

In this paper we describe how information processing constructs originating in AI have become part of the Attachment Theory tool kit. We survey the early influence of AI within the theoretical framework that John Bowlby formed as the foundation of Attachment Theory between the 1950s and 1980s. We then review recent work which has built upon Bowlby's framework and is concerned with modelling and simulating attachment phenomena. We conclude by discussing some possible advantages that might arise from incorporating recent work on Bayesian arbitration into attachment control system models.

Biologically inspired robotics is a well-known approach to the design of autonomous intelligent robot systems. Very often it is assumed that biologically inspired models successfully implemented on robots offer new scientific knowledge for biology too. In other words, robot experiments serving as a replacement for the biological system under investigation are assumed to provide new scientific knowledge for biology. This article is a critical investigation of this assumption. We begin by clarifying what we mean by "new scientific knowledge." Following Karl Popper's work The Logic of Scientific Discovery, we conclude that in general robotic experiments serving as a replacement for biological systems can never directly deliver any new scientific knowledge for biology. We further argue that there is no formal guideline which defines the level of "biological plausibility" for biologically inspired robot implementations. Therefore, there is no reason to prefer one kind of robotic setup over others. Any claimed relevance for biology, however, is only justified if results from robotic experiments are translated back into new models and hypotheses amenable to experimental tests within the domain of biology. This translation "back" into biology is very often missing, and we will discuss popular robotics frameworks in the context of Brain Research, Cognitive Science and Developmental Robotics in order to highlight this issue. Nonetheless, such frameworks are valuable and important, like pure mathematics, because they might lead to new formalisms and methods which in future might be essential for gaining new scientific knowledge if applied in biology. No one can tell whether, or which of, the current robotics frameworks will provide these new scientific tools. What we can already say, and this is the main message of this article, is that robot systems serving as a replacement for biological systems won't be sufficient for the test of biological models, i.e. for gaining new scientific knowledge in biology.

Human adults have relatively sophisticated cognitive abilities, and manage vast quantities of diverse knowledge. The amount of data in the genome, and the differences with other species, show that a relatively small amount of information must code a system that can bootstrap itself to this high level of sophistication. How this bootstrapping process works remains largely a mystery. The technique of computational modelling opens up the possibility of developing a complete model of human cognitive development which could simulate the whole developmental sequence, starting with infancy. Existing computational models of infant development, however, typically model only one episode in one area of competence, and these individual episodes have not been linked up. This paper looks at what work needs to be done to take forward the idea of attempting to explain cognitive development at a level which could account for longer sequences of development. We argue that in order to understand the developmental processes underlying longer sequences we first need to determine what these possible sequences are, in detail; existing knowledge of such sequences is quite sketchy. The paper identifies the need to discover a directed graph of behaviours describing all the ancestors of sophisticated behaviours. The paper outlines some experiments that may help to discover such a graph.

A simple approach to route following is to scan the environment and move in the direction that appears most familiar. In this paper we investigate whether an approach such as this could provide a framework for studying visually guided route learning in ants. As a proxy for familiarity we use the learning algorithm AdaBoost with simple Haar-like features to classify views as either part of a learned route or not. We show the feasibility of our approach as a model of ant-like route acquisition by learning a non-trivial route through a real-world environment using a large gantry robot equipped with a panoramic camera.

Classically, visual attention is assumed to be influenced by visual properties of objects, e.g. as assessed in visual search tasks. However, recent experimental evidence suggests that visual attention is also guided by action-related properties of objects ("affordances") [1, 2], e.g. the handle of a cup affords grasping the cup; therefore attention is drawn towards the handle. In a first step towards modelling this interaction between attention and action, we implemented the Selective Attention for Action model (SAAM). The design of SAAM is based on the Selective Attention for Identification model (SAIM) [3]. For instance, we also followed a soft-constraint satisfaction approach in a connectionist framework. However, SAAM's selection process is guided by locations within objects suitable for grasping them whereas SAIM selects objects based on their visual properties. In order to implement SAAM's selection mechanism two sets of constraints were implemented. The first set of constraints took into account the anatomy of the hand, e.g. maximal possible distances between fingers. The second set of constraints (geometrical constraints) considered suitable contact points on objects by using simple edge detectors. We demonstrate here that SAAM can successfully mimic human behaviour by comparing simulated contact points with experimental data.

Can the sciences of the artificial positively contribute to the scientific exploration of life and cognition? Can they actually improve the scientific knowledge of natural living and cognitive processes, from biological metabolism to reproduction, from conceptual mapping of the environment to logical reasoning, language, or even emotional expression? Our article aims to answer these questions in the affirmative. Its main object is the emergent scientific methodology often called the "synthetic approach", which promotes the programmatic production of embodied and situated models of living and cognitive systems in order to explore aspects of life and cognition not accessible in natural systems and scenarios. The first part of this article presents and discusses the synthetic approach, and proposes an epistemological framework which promises to warrant genuine transmission of knowledge from the sciences of the artificial to the sciences of the natural. The second part of this article looks at the research applying the synthetic approach to the psychological study of emotional development. It shows how robotics, through the synthetic methodology, can develop a particular perspective on emotions, coherent with current psychological theories of emotional development and fitting well with the recent "cognitive extension" approach proposed by cognitive sciences and philosophy of mind.

Can machines ever have qualia? Can we build robots with inner worlds of subjective experience? Will qualia experienced by robots be comparable to subjective human experience? Is the young field of Machine Consciousness (MC) ready to answer these questions? In this paper, rather than trying to answer these questions directly, we argue that a formal definition, or at least a functional characterization, of artificial qualia is required in order to establish valid engineering principles for synthetic phenomenology (SP). Understanding what might be the differences, if any, between natural and artificial qualia is one of the first questions to be answered. Furthermore, if an interim and less ambitious definition of artificial qualia can be outlined, the corresponding model can be implemented and used to shed some light on the very nature of consciousness. In this work we explore current trends in MC and SP from the perspective of artificial qualia, attempting to identify key features that could contribute to a practical characterization of this concept. We focus specifically on potential implementations of artificial qualia as a means to provide a new interdisciplinary tool for research on natural and artificial cognition.

POSTERS

Here we investigate an innate movement strategy for view-based homing using snapshots containing only the skyline. A simple method is developed in simulation before being adapted for use on a real robot.

Biological motion perception is the ability to perceive gestalts of moving human or animal figures from two-dimensional projections of their joints. These projections are very salient stimuli. A neural network system for generation of such sequences and transitions between them is described. The network oscillates several sequential motion patterns learned from human motion capture data. Realistic transitions between them can then be emergently generated while switching between modes of oscillation (e.g., from one type of gait to another). This system might be further explored as a cognitive model for human motion generation, showing that several motions can be stored in exactly the same structure. It can also be exploited as a controller for the human-like motion of robots or animated characters.

We observed the vocal behaviour of prelinguistic infants in their natural habitat, a nursery environment. We identified a number of contexts that reliably triggered vocalizations in the infants and conducted an acoustic analysis of the different call types. Our results show that prelinguistic infants produce different categories of vocalizations. Categorical production has also been observed in other primate species, for example Vervet or Diana monkeys. Computer modelling, particularly neural networks, could aid the analysis of such data by modelling the perceptual processes that are used by the recipient to categorize these calls.

This paper presents the "Mixed Societies of Robots and Vertebrates" project that is carried out by the Mobile Robotics group at EPFL, Switzerland in collaboration with the Unite d'Ecologie Sociale at ULB, Belgium. The idea of the project is to study behavior in young chicks of the domestic chicken (Gallus gallus domesticus) by using mobile robots able to interact with animals and accepted as members of an animal group. In contrast to other studies where relatively simple robotic devices are used to test specific biological hypotheses, we aim to design a multipurpose robot that will allow ethologists to study more sophisticated phenomena by providing a wide range of sensors and sufficient computational power. In this paper we present the PoulBot robot and give an example of an ethological experiment in which it is used to study social aggregation in chick groups.

We developed a system whose purpose is to obtain a robot able to emulate the strategies used by a subject facing a problem-solving task. We have been able to solve this problem within a strongly constrained setting in which the subject's strategy can be induced. Our solution encompasses the solution of another problem, namely how to close the raw-data/trained-system/raw-data loop. An important aspect of inducing the subject's strategies was becoming able to recognise changes of strategy. The H-CogAff architecture, from which our control architecture is inspired (though we do not yet attempt to completely emulate it), provides a handy trigger for strategy changes: the global alarm system. We show that implementing it improves the robot's performance.

Artificial Intelligence (AI) and Animal Cognition (AC) share a common goal: to study learning and causal understanding. However, the perspectives are completely different: while AC studies intelligent systems present in nature, AI tries to build them almost from scratch. It is proposed here that both visions are complementary and should interact more to better achieve their ends. Nonetheless, before efficient collaboration can take place, a greater mutual understanding of each field is required, beginning with clarifications of their respective terminologies and considering the constraints of the research in each field.

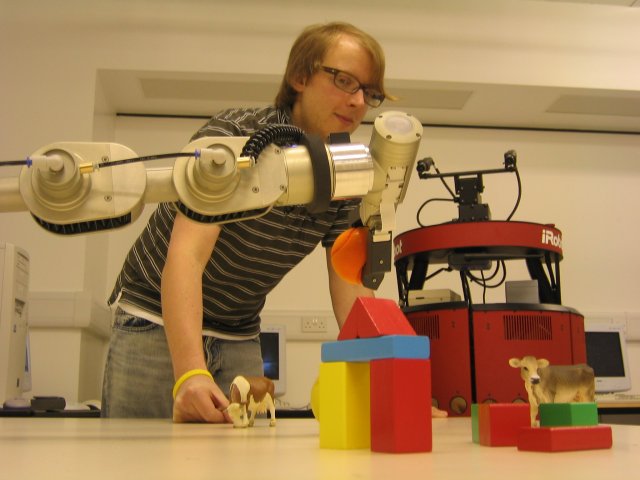

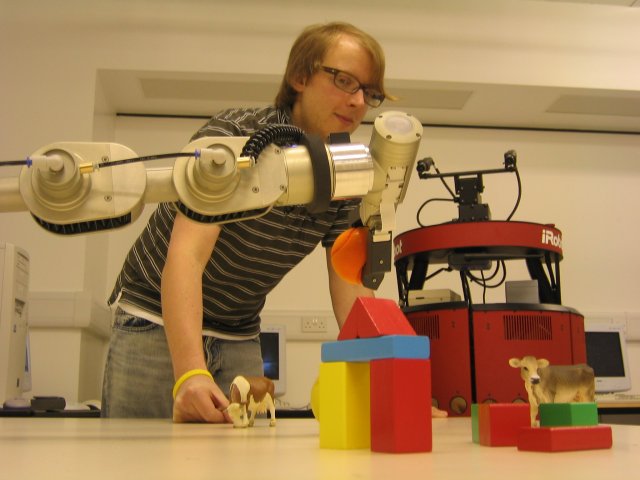

There is an abundance of different robot platforms in use in various fields of robotics, from small, inexpensive robots for experiments in small arenas and mazes to large human-sized robots for indoor scenarios or rough outdoor terrain. When looking at platforms with manipulation capabilities, the classical "tons on wheels" design is still the most popular because it is easy to control, but it poses stability problems when mounting an arm on top, especially for smaller platforms. Humanoids are becoming increasingly popular, but the control of so many degrees of freedom is still a challenging research topic. With Corvid we present a research platform that targets small to mid-sized mobile manipulation scenarios for teaching and research. It is intended as a robotic tool for cognitive scientists who want to test their theories in a physical system. A tracked base provides stable support for a 4 DOF manipulator (a 5th is optional) with gripper and camera. The platform is comparatively inexpensive and offers solid onboard processing power, wireless connectivity and good extensibility via standard interfaces. As such, Corvid offers a system that is both versatile enough to support interesting research questions and manageable and cheap enough to pose a low entry barrier for usage. We will provide a webpage which summarises all parts and instructions at www.acin.tuwien.ac.at/1/research/v4r

Symbols are the essence of a language and need to be grounded for effective information passing, including interaction and perception. A language is grounded in a multimodal sensory motor experience with the physical world. The system described in this paper acts in a simple virtual world. The system interacts with the outer world multimodally, using vision and language. Two simulations are given in which the system learns to categorise five basic shapes. In the second simulation, the system labels these categories, grounding them. Both simulations performed perfectly on all tests.
